Azure Data Factory (ADF) is a Microsoft Azure cloud service. It is designed for data integration and ETL processes, and lets you create data-driven pipelines for data transformation.

In this post, we will learn about Microsoft Azure Data Factory. This service helps us combine data from multiple sources and transform it into analytical models for visualization and reporting.

We will discuss the following topics:

- What is Azure Data Factory (ADF)?

- Why do we need Azure Data Factory?

- What is Data Integration Service?

- What is ETL?

- Four Key Components of Data Factory

- How does Azure Data Factory work?

- Copy Activity in Azure Data Factory

- How to create an Azure Data Factory?

- How does ADF differ from other ETL tools?

- FAQs

- Conclusion

What is Azure Data Factory (ADF)?

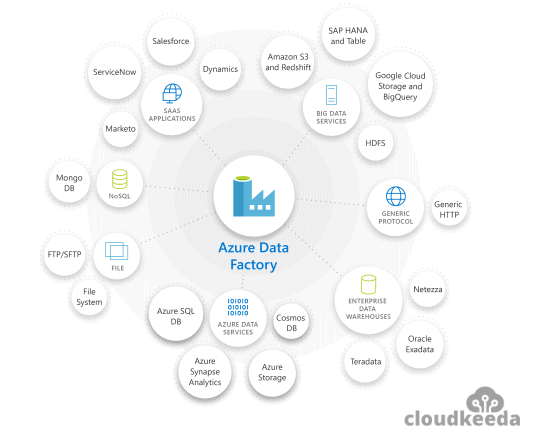

Azure Data Factory is a data integration service. It takes data from one or more data sources and transforms it into a format suitable for processing.

The data source may contain noise that needs to be filtered out. You can use Azure Data Factory connectors to get the data you want and discard the rest. Azure Data Factory ingests data from various sources and loads it into Azure Data Lake Storage.

In other words, it is a cloud-based ETL service that allows you to coordinate the movement of data and create data-driven pipelines for the large-scale transformation of data.

Why do we need Azure Data Factory?

Let’s find out why we actually need Azure Data Factory.

- Data Factory supports a core need of cloud projects. In almost every cloud project, you need to move data between different networks (on-premises networks and clouds) and between different services (for example, different Azure storage services).

- Data Factory is a useful enabler for companies looking to take the first step to the cloud and connect their on-premises data to the cloud.

- Azure Data Factory has an integration runtime. Its self-hosted variant is a gateway service that you install on-premises, and it provides secure, high-performance transfer of data to and from the cloud.

What is Data Integration Service?

- Data integration includes collecting data from one or more sources.

- The process can then transform and clean up the data, or enrich it with additional data.

- Finally, the combined data is stored in a data platform service that supports the type of analysis you want to perform. Azure Data Factory can automate this process in an arrangement called Extract, Transform, and Load (ETL).

What is ETL?

- Extract – In this step, the data engineer defines the data and its source. Defining the data source means identifying source details such as subscriptions and resource groups, as well as identity information such as secrets and keys. Defining the data means specifying what to extract, using a set of files, a database query, or an Azure Blob storage name for blob storage.

- Transform – Data transform operations include joining, splitting, adding, deriving, deleting, or pivoting columns. This step also maps the fields between the data source and the data target.

- Load – Many Azure targets can accept data formatted as a file, JavaScript Object Notation (JSON), or blob. Test the ETL job in a test environment first. Then move the job to the production environment and load the production system.
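To make the three ETL stages concrete, here is a minimal sketch in plain Python. It does not use Azure Data Factory at all; the sample CSV text, the field names, and the function names are made up for illustration, and the "load" step only produces JSON text instead of writing to a real target.

```python
import csv
import io
import json

# Hypothetical raw input standing in for a source file; not real data.
RAW_CSV = """id,first_name,last_name,amount
1,Ada,Lovelace,10.5
2,Alan,Turing,
3,Grace,Hopper,7.25
"""


def extract(text):
    """Extract: read rows from the source (here, CSV text)."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: drop incomplete rows, derive a column, map fields to the target."""
    result = []
    for row in rows:
        if not row["amount"]:  # filter out rows with a missing amount
            continue
        result.append({
            "customer_id": int(row["id"]),                            # renamed field
            "full_name": f'{row["first_name"]} {row["last_name"]}',   # derived column
            "amount": float(row["amount"]),
        })
    return result


def load(records):
    """Load: serialize to JSON, one of the formats many targets accept."""
    return json.dumps(records, indent=2)


if __name__ == "__main__":
    print(load(transform(extract(RAW_CSV))))
```

In a real Data Factory pipeline, each of these functions would correspond to one or more activities, and the source and target would be data stores instead of in-memory strings.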

Azure Data Factory provides both code-based and code-free users with approximately 100 enterprise connectors and robust resources to meet their data transformation and data movement needs.

Next, we will discuss the key components of Data Factory.

Four Key Components of Data Factory

Let’s have a quick look at the four main components of Azure Data Factory.

Data Factory includes four main components that work together to define the input and output data, processing events, and the schedules and resources needed to execute the desired data flow.

- A dataset represents the data structure in the data store. Input datasets are inputs to activities in the pipeline, and an output dataset is the output of an activity. For example, an Azure Blob dataset identifies the blob container and folder in Azure Blob Storage from which the pipeline reads data. Similarly, an Azure SQL table dataset specifies the table to which the activity writes its output data.

- A pipeline is a group of activities. It groups activities into a unit that performs a task together. A data factory can run multiple pipelines. For example, a pipeline can contain a set of activities that ingest data from Azure Blobs and run Hive queries on HDInsight clusters to partition the data.

- Activities define the actions to take on the data. Data Factory supports data movement activities and data transformation activities, and the current version also adds control activities for orchestrating the flow of a pipeline.

- A linked service defines the information that Data Factory needs to connect to an external resource. For instance, an Azure Storage linked service specifies a connection string to establish a connection to your Azure Storage account.
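The way these components reference one another can be sketched as JSON-style definitions, written here as Python dictionaries. All names (such as `MyStorageLinkedService` and `CopyBlobToSqlPipeline`) are hypothetical, and the property names follow the general shape of Data Factory's JSON definitions, so treat them as assumptions and check the connector documentation for the exact fields.

```python
import json

# Linked services: how to connect to external resources (placeholders, not real secrets).
linked_services = [
    {"name": "MyStorageLinkedService",
     "properties": {"type": "AzureBlobStorage",
                    "typeProperties": {"connectionString": "<storage-connection-string>"}}},
    {"name": "MySqlLinkedService",
     "properties": {"type": "AzureSqlDatabase",
                    "typeProperties": {"connectionString": "<sql-connection-string>"}}},
]

# Datasets: the data structures inside those stores.
datasets = [
    {"name": "InputBlobDataset",
     "properties": {"type": "AzureBlob",
                    "linkedServiceName": {"referenceName": "MyStorageLinkedService",
                                          "type": "LinkedServiceReference"},
                    "typeProperties": {"folderPath": "input-container/incoming"}}},
    {"name": "OutputSqlDataset",
     "properties": {"type": "AzureSqlTable",
                    "linkedServiceName": {"referenceName": "MySqlLinkedService",
                                          "type": "LinkedServiceReference"},
                    "typeProperties": {"tableName": "dbo.Sales"}}},
]

# Pipeline: a group of activities; this one has a single copy activity.
pipeline = {
    "name": "CopyBlobToSqlPipeline",
    "properties": {"activities": [{
        "name": "CopyFromBlobToSql",
        "type": "Copy",
        "inputs": [{"referenceName": "InputBlobDataset", "type": "DatasetReference"}],
        "outputs": [{"referenceName": "OutputSqlDataset", "type": "DatasetReference"}],
        "typeProperties": {"source": {"type": "BlobSource"}, "sink": {"type": "SqlSink"}},
    }]},
}


def referenced_datasets(pipeline_def):
    """Collect the dataset names used as inputs or outputs by any activity."""
    names = set()
    for activity in pipeline_def["properties"]["activities"]:
        for ref in activity.get("inputs", []) + activity.get("outputs", []):
            names.add(ref["referenceName"])
    return names


def unresolved_references(pipeline_def, dataset_defs, linked_service_defs):
    """Return names that are referenced but never defined."""
    dataset_names = {d["name"] for d in dataset_defs}
    service_names = {s["name"] for s in linked_service_defs}
    missing = referenced_datasets(pipeline_def) - dataset_names
    for d in dataset_defs:
        service = d["properties"]["linkedServiceName"]["referenceName"]
        if service not in service_names:
            missing.add(service)
    return missing


if __name__ == "__main__":
    print(json.dumps(pipeline, indent=2))
    print("Unresolved:", unresolved_references(pipeline, datasets, linked_services))
```

The point of the sketch is the chain of references: an activity names its datasets, and each dataset names the linked service that knows how to reach the store.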

Check out: ADF Interview questions

How does Azure Data Factory work?

The Data Factory service lets you create a data pipeline, move and transform data, and then run the pipeline on a specific schedule (hourly, daily, weekly, and so on). That is, the data consumed and generated by the workflow is time-sliced data, and you can set the pipeline mode to scheduled (for example, once a day) or one-time.
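The idea of time-sliced data on a schedule can be illustrated with a short sketch. This is plain Python, not a Data Factory API; the `INTERVALS` table and the `time_slices` function are hypothetical names used only to show how a recurring schedule divides time into windows, one per pipeline run.

```python
from datetime import datetime, timedelta

# Hypothetical mapping from a schedule name to the gap between runs.
INTERVALS = {
    "hourly": timedelta(hours=1),
    "daily": timedelta(days=1),
    "weekly": timedelta(weeks=1),
}


def time_slices(start, frequency, count):
    """Return (window_start, window_end) pairs, one per scheduled run."""
    step = INTERVALS[frequency]
    return [(start + i * step, start + (i + 1) * step) for i in range(count)]


if __name__ == "__main__":
    for window_start, window_end in time_slices(datetime(2024, 1, 1), "daily", 3):
        print(window_start.isoformat(), "->", window_end.isoformat())
```

Each run would then process only the data that falls inside its own window, which is what makes re-running a single failed slice practical.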

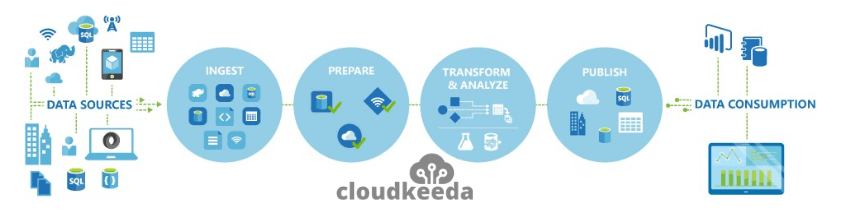

Azure Data Factory pipelines (data-driven workflows) typically perform three steps:

Connect and Collect: Connect to all required data and processing sources such as SaaS services, file shares, FTP, web services and more. Then use the copy activity in a data pipeline to move the data from on-premises and cloud source data stores to a centralized data store in the cloud for further analysis.

Transform and Enrich: Once the data is in a centralized data store in the cloud, it is transformed using compute services such as HDInsight Hadoop, Spark, Data Lake Analytics, and Machine Learning.

Publish: Transfer the transformed data from the cloud to an on-premises store such as SQL Server, or keep it in a cloud storage source for use by BI, analytics tools, and other applications.

Also Check: Our blog post on Azure Certification Paths

Copy Activity in Azure Data Factory

With ADF, you can use the copy activity to copy data between on-premises and cloud data stores. After copying the data, you can further transform and analyze it in other activities. You can also use the copy activity to publish transformation and analysis results for business intelligence (BI) and application consumption.

Monitor Copy Activity

- Once you’ve created a pipeline and published it in ADF, you can associate it with a trigger.

- The ADF user interface helps you monitor all the running pipelines.

- To monitor the execution of a copy activity, go to the Data Factory Author & Monitor user interface.

- The Monitor tab shows the number of pipelines that are running.

- Click the pipeline name link to see the list of activity runs in that pipeline run.
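Monitoring can also be scripted by polling a run's status until it reaches a final state. The sketch below is an assumption-laden illustration: `get_status` is a hypothetical callable that you would back with whatever client you use to query Data Factory, and the status strings in `TERMINAL_STATES` are assumed to match what the service reports.

```python
import time

# Assumed names of the final run states; verify against the service you query.
TERMINAL_STATES = {"Succeeded", "Failed", "Cancelled"}


def wait_for_run(get_status, poll_seconds=30.0, max_polls=120, sleep=time.sleep):
    """Poll get_status() until the run finishes or max_polls is reached.

    get_status: hypothetical zero-argument callable returning a status string.
    sleep: injectable so the loop can be exercised without real waiting.
    """
    for _ in range(max_polls):
        status = get_status()
        if status in TERMINAL_STATES:
            return status
        sleep(poll_seconds)
    raise TimeoutError("pipeline run did not finish within the polling limit")


if __name__ == "__main__":
    # Simulated status sequence standing in for a real pipeline run.
    fake_statuses = iter(["Queued", "InProgress", "InProgress", "Succeeded"])
    print(wait_for_run(lambda: next(fake_statuses), poll_seconds=0))
```

Passing `sleep` as a parameter keeps the function easy to test, and the `max_polls` limit prevents a stuck run from blocking the script forever.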

Delete Activity In Azure Data Factory

- Always back up your files before deleting them with the Delete activity, in case you need to restore them later.

- Keep in mind that ADF must have write permissions to delete files or folders from the storage store.
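The back-up-before-delete precaution can be shown with a local-filesystem analogy. This sketch is not the ADF Delete activity; it uses only the Python standard library, and the function name `backup_then_delete` and the file names are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def backup_then_delete(target: Path, backup_dir: Path) -> Path:
    """Copy the file to backup_dir, check the copy, and only then delete the original."""
    backup_dir.mkdir(parents=True, exist_ok=True)
    backup_path = backup_dir / target.name
    shutil.copy2(target, backup_path)  # copy first, keeping file metadata
    if backup_path.stat().st_size != target.stat().st_size:
        raise OSError("backup looks incomplete; refusing to delete the original")
    target.unlink()  # requires write permission on the containing folder
    return backup_path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        data_file = root / "old_report.csv"
        data_file.write_text("id,value\n1,42\n")
        saved = backup_then_delete(data_file, root / "backup")
        print("original exists:", data_file.exists())
        print("backup exists:", saved.exists())
```

In a pipeline, the same ordering would typically be a copy activity that succeeds before the Delete activity is allowed to run.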

How to create an Azure Data Factory?

Step 1) Go to the Azure portal.

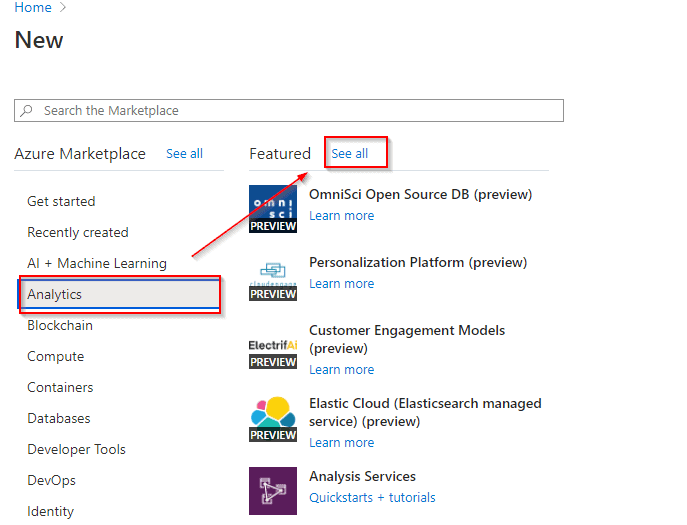

Step 2) Go to the portal menu and click on Create a resource.

Step 3) Select Analytics, and then select See all.

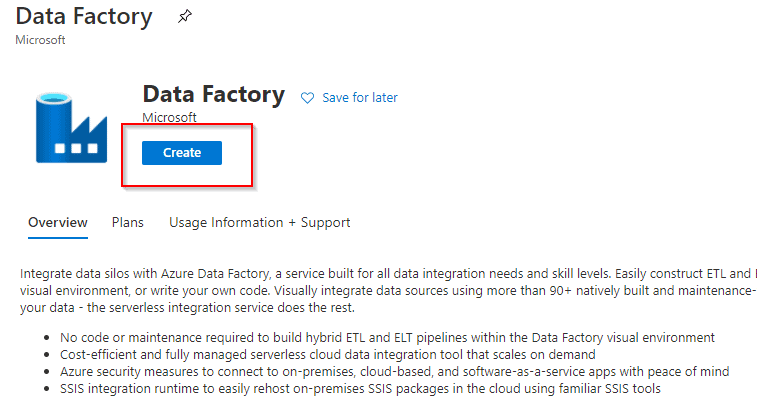

Step 4) Select Data Factory, and then click Create.

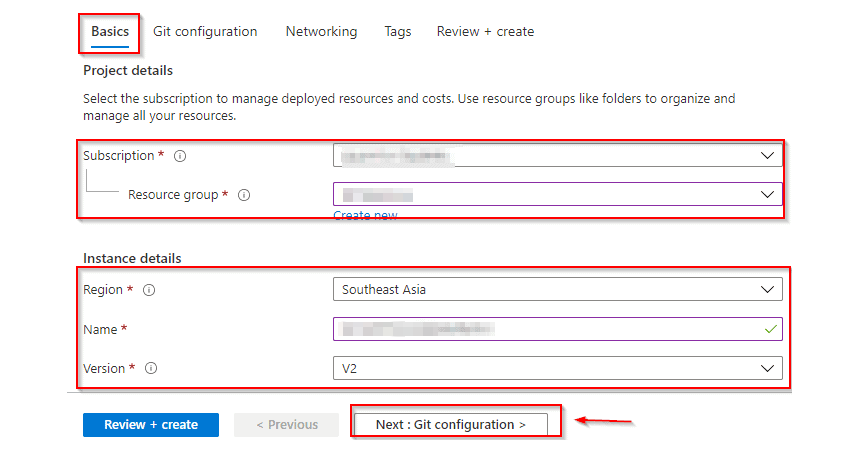

Step 5) On the Basics page, as shown in the image, enter the required information. Then click Git configuration.

Step 6) On the Git configuration page, select the Configure Git later check box, and then go to Networking.

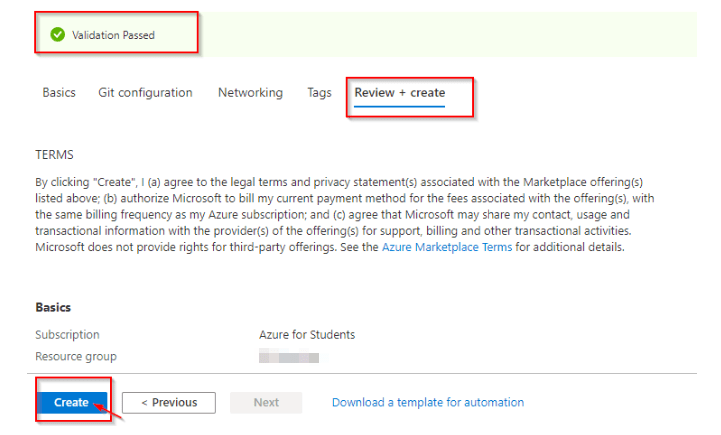

Step 7) On the Networking page, keep the default settings and click Tags. Then select Review + create, and click Create, as shown in the image.

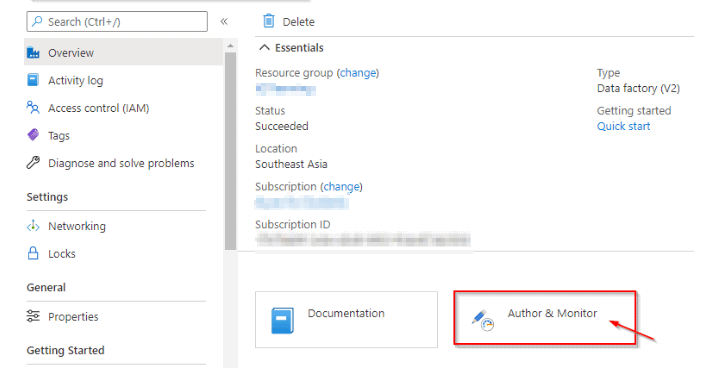

Step 8) Select Go to resource, and then select Author & Monitor to launch the Data Factory user interface in a new tab.

Check out: Create Azure Free Account

How does ADF differ from other ETL tools?

Now we will have a look at what makes ADF different from other ETL tools.

- Data Factory can be used as a cloud ETL (Extract, Transform, Load) or ELT (Extract, Load, Transform) tool.

- Several features set Azure Data Factory apart from other tools. For example, you can run SSIS (SQL Server Integration Services) packages.

- As a fully managed PaaS product, it scales automatically based on the specified workload.

- It connects on-premises data and the Azure cloud through a gateway.

- It can handle large amounts of data during ETL processes.

- It connects to and integrates with other compute services such as Azure Batch and HDInsight.

FAQs

Q1. Can we consider Data Factory an ETL tool?

Ans: Yes, Data Factory is a cloud-based ETL and data integration service. It helps to create workflows for moving and transforming data.

Q2. Is Azure Data Factory a Platform as a Service (PaaS) or Software as a Service (SaaS) offering?

Ans: Azure Data Factory is a Platform as a Service (PaaS) offering from Microsoft for data transformation and loading. It also supports data movement between cloud-based data sources and on-premises databases.

Q3. Do we need to pay for Azure Data Factory?

Ans: Yes, Azure Data Factory uses a consumption-based pricing plan. You are charged only for the services you use and for the time you use them.

Conclusion

Data Factory makes it easy to integrate cloud data with on-premises data. It stands out for its ease of use and its ability to transform and enrich complex data, and it provides scalable, available, and inexpensive data integration. Today, this service is a key component of many data platforms and machine learning projects. I hope this Azure Data Factory tutorial has added value and helps kickstart your journey with Data Factory.